AI is clearly one technology that will help businesses innovate, stay agile and, hence, scale. The companies that become “AI-inside” will have the ability to synthesize information, the capacity to learn, and the capability to deliver insights at scale. According to new data from IDC, worldwide spending on artificial intelligence (AI) is forecast to double over the coming four years to reach $110 billion by 2024!

As adopting AI becomes a ‘must’, companies are quickly learning that AI doesn’t just scale solutions; it also scales risk. Companies need a clear plan for this new facet, where data and AI ethics can pose problems if not closely addressed.

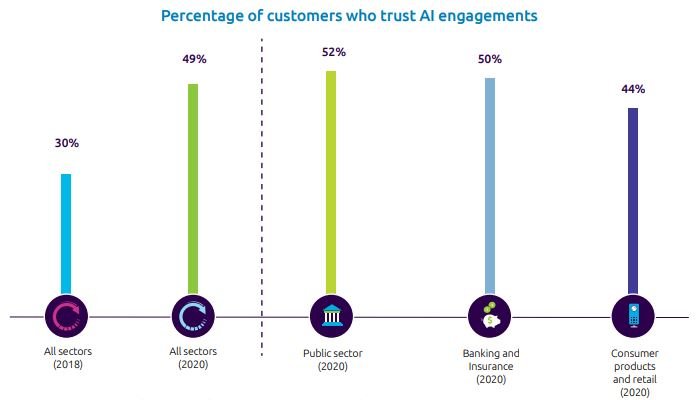

It’s interesting to note that in 2020, 50% of customers said they had benefited from personalized recommendations and suggestions, up from 26% in 2019. The share of customers who trust AI interactions is on the rise and will continue to grow, though it is still less than half.

(Chart: Percentage of customers who trust AI engagements)

Enterprise adoption of Ethical AI:

Google was one of the first companies to pledge that its AI will only ever be used ethically, i.e. that it will never be engineered to become a weapon. Google published its own ethical code of practice in 2018 in response to widespread criticism over its relationship with a US government weapons program, and its CEO later confirmed the code in a public statement.

Google, Facebook, Amazon, Microsoft and IBM have come together in the Partnership on AI to develop best practices for AI. A big part of that work examines how AI should be, and can be, used ethically, as well as sharing ideas on educating the public about the uses of AI and other issues surrounding the technology.

The consortium explained further: “This partnership on AI will conduct research, organize discussions, provide thought leadership, consult with relevant third parties, respond to questions from the public and media, and create educational material that advance the understanding of AI technologies including machine perception, learning, and automated reasoning.”

Microsoft is also working closely with the European Union on the development of an AI regulatory framework, considered to be the first of its kind. The hope is to create something that offers the same sort of guardrails and principles that GDPR establishes around the use of data.

Tools that promote neutrality (by detecting bias):

There are several tools out there to help developers and software designers identify biases in their AI-based applications, such as:

- AI Fairness 360 – An extensible open source toolkit that can help you examine, report, and mitigate discrimination and bias in machine learning models throughout the AI application lifecycle.

- Adversarial Robustness Toolbox (ART) – A Python library for Machine Learning Security. ART provides tools that enable developers and researchers to evaluate, defend, certify and verify machine learning models and applications against the adversarial threats of Evasion, Poisoning, Extraction, and Inference.

- Sogeti’s Artificial Data Amplifier (ADA) – An AI-driven synthetic data generation solution that helps combat and mitigate bias by generating synthetic data that can rebalance the original dataset, providing a fairer representation of minority groups in the data.

- Glassbox – Leverages open source libraries to check data sufficiency and quality, validate AI models against preset industry benchmarks, and measure a model’s resilience to perturbations in inputs. Reports generated by the solution can help developers and users of AI fine-tune their models for accuracy, recalibrate them for new environments, and retrain them for new features and algorithms, thereby improving their resiliency.

- AI-RFX Procurement Framework – Created by the Institute for Ethical AI & Machine Learning, a set of templates that empower industry practitioners to raise the bar for AI safety, quality and performance.

- Not Equal Network – Aims to foster new collaborations in order to create the conditions for digital technology to support social justice, and develop a path for inclusive digital innovation and a fairer future for all in and through the digital economy.
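To make “examining” and “rebalancing” bias a little more concrete, here is a minimal sketch in plain Python of two group-fairness metrics that toolkits such as AI Fairness 360 report (statistical parity difference and disparate impact), plus naive random oversampling as a much simpler stand-in for ADA’s synthetic data generation. This is not the API of AI Fairness 360, ADA or any other tool listed above; the function names and the toy data are hypothetical and chosen for illustration only.

```python
import random
from typing import Dict, List, Sequence, Tuple


def selection_rate(preds: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Fraction of members of `group` that received the favourable outcome (1)."""
    outcomes = [p for p, g in zip(preds, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no samples for group {group!r}")
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(preds, groups, unprivileged: str, privileged: str) -> float:
    """P(favourable | unprivileged) - P(favourable | privileged); 0 means parity."""
    return selection_rate(preds, groups, unprivileged) - selection_rate(preds, groups, privileged)


def disparate_impact(preds, groups, unprivileged: str, privileged: str) -> float:
    """Ratio of selection rates; values below 0.8 are often flagged (the "four-fifths rule")."""
    privileged_rate = selection_rate(preds, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favourable outcomes; ratio undefined")
    return selection_rate(preds, groups, unprivileged) / privileged_rate


def oversample_minority(rows: Sequence, groups: Sequence[str], seed: int = 0) -> Tuple[List, List[str]]:
    """Duplicate randomly chosen rows of smaller groups until every group matches the largest.

    Hypothetical helper: plain random oversampling, NOT synthetic data generation.
    """
    rng = random.Random(seed)
    by_group: Dict[str, List] = {}
    for row, g in zip(rows, groups):
        by_group.setdefault(g, []).append(row)
    target = max(len(members) for members in by_group.values())
    out_rows: List = []
    out_groups: List[str] = []
    for g, members in by_group.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        for row in members + extra:
            out_rows.append(row)
            out_groups.append(g)
    return out_rows, out_groups


if __name__ == "__main__":
    # Hypothetical model decisions (1 = approved) and a hypothetical sensitive attribute.
    preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
    print("statistical parity difference:", statistical_parity_difference(preds, groups, "B", "A"))
    print("disparate impact:", disparate_impact(preds, groups, "B", "A"))
```

In this toy data group B is approved 40% of the time versus 60% for group A, giving a statistical parity difference of about -0.2 and a disparate impact of about 0.67, below the common 0.8 threshold. Duplicating rows only balances group sizes; it does not by itself fix skewed outcomes, which is one reason dedicated tools generate new synthetic records instead.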

Conclusion:

Creating “accountable” AI is key for all stakeholders in the business landscape. Bias is one of the major issues with AI, as it is developed based on the choices of the researchers involved. This effectively makes it impossible to create a system that is entirely “neutral”. Hence the need for an ethical AI framework with built-in accountability: not just to improve customer engagement and mitigate the risks of unethical interactions, but also to actively use data and AI for good.

#AI #ML #Ethical #Bias #Neutral #DeepLearning #UserExperience #DigitalDisruption #FraudDetection #ConversationalAI #CognitiveIntelligence #NLP #RPA #ProductInnovation

#Strategy #Management #Consulting #Transformation #Technology #Outsourcing #CreativeDisruptions #EternalQuest #FindingTruth